We are excited to announce the successful completion of the “5G Networks as an Enabler for Real-time Learning in Sustainable Farming” project, supported by partial funding from the Ministry of Economic Affairs, Industry, Climate Action, and Energy of the State of North Rhine-Westphalia.

This initiative represents a significant step forward in exploring the transformative potential of 5G technology in agriculture, specifically aimed at enhancing the ecological, economic, and sustainable aspects of sugar beet cultivation.

The project leveraged the low latency of 5G to integrate advanced information technology systems in real time, enabling immediate responses to sensor and positional data within predefined time limits.

Project Focus and Partnership

In collaboration with partners at HSHL and with the support of Pfeifer & Langen, the project studied the entire lifecycle of sugar beet cultivation in the partners’ fields. It aimed to demonstrate how 5G could act as a technology catalyst for innovation and efficiency in North Rhine-Westphalia’s agricultural sector.

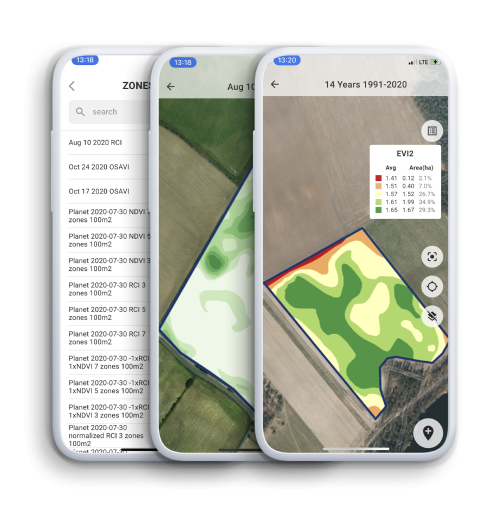

Role of GeoPard Agriculture

GeoPard Agriculture played a crucial role in defining and implementing key aspects of the project, including scenarios for plant detection, monitoring, and production prediction. We developed a prototype AI system tailored for the 5G agricultural environment, executed models within a cloud infrastructure, and created a mobile application for real-time interaction with cloud-based models.

Technological Integration

Artificial intelligence (AI) methods were deployed on a cloud infrastructure with high computing capacity. AI algorithms classified plants in real time during each field pass and monitored their growth throughout the entire lifecycle, eliminating field visits made solely for data collection.

This enabled precise application of fertilizers and crop protection products, with machine learning algorithms dynamically adjusting application rates during each pass.

Deployment of Unmanned Vehicles

The project also used the reduced latency of 5G to deploy unmanned vehicles for plant monitoring and data collection. These vehicles gathered real-time insights that helped further optimize agricultural practices.

Project Outcomes: Enhancing Sugar Beet Production with 5G Technology

By analyzing the entire lifecycle of sugar beet cultivation, the project demonstrated how 5G technology could serve as a transformative enabler in North Rhine-Westphalia’s agricultural sector. To structure and demonstrate the outcomes, the researchers organized the work into work packages, each covering different scenarios and infrastructure.

Scenario Definition Considering Existing Geodata and ML Infrastructure

The project demonstrated how traditional processes within the sugar beet production lifecycle could be enhanced by integrating 5G technology. In this work package, the team:

- Developed ready-to-implement scenarios for plant recognition, monitoring, and production prediction.

- Established technical requirements necessary for the successful deployment of these scenarios.

- Identified and assessed relevant ecological and economic indicators to evaluate the added value brought by the 5G network.

This phase underscored the project’s commitment to integrating cutting-edge technology with existing agricultural practices. The proposed architecture combined four components. The 5G network provided high-speed connectivity for real-time data collection and processing between edge devices and the cloud. The cloud infrastructure supplied the resources for training and deploying large-scale AI models, and the AI platform offered tools for model development and deployment. Finally, the application layer presented actionable insights from the AI models to end users, supporting their decisions.

Machine Learning and AI in the Context of 5G

This work package adapted existing machine learning and AI systems to the scenarios outlined above and optimized them accordingly. The team:

- Defined the system’s goals and developed its architecture.

- Collected ground truth data for training and validating AI models.

- Established and annotated a suitable database tailored for plant identification and monitoring.

- Integrated AI models seamlessly into the 5G network infrastructure.

In this phase, edge devices equipped with 5G SIM cards played a crucial role. Key performance indicators (KPIs), such as network latency and end-to-end (E2E) latency, were monitored closely. Measurements also covered reliability and availability (whether data packets were received correctly), as well as user data rates and peak data rates.
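The KPIs above can be computed from per-packet logs. The following Python sketch is illustrative only. The `Packet` record layout, the 10 ms deadline, and the reading of reliability as the share of sent packets delivered intact within that deadline are all assumptions. They do not describe the project's measurement setup.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Packet:
    sent_ms: float                 # send timestamp on the edge device
    received_ms: Optional[float]   # arrival timestamp in the cloud, None if lost
    size_bytes: int
    intact: bool = True            # False if the payload failed an integrity check


def percentile(values: List[float], q: float) -> float:
    """Nearest-rank percentile, q in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def kpis(packets: List[Packet], deadline_ms: float = 10.0) -> dict:
    """Latency, reliability, and user data rate for one measurement run (hypothetical format)."""
    delivered = [p for p in packets if p.received_ms is not None and p.intact]
    if not delivered:
        raise ValueError("no packets were delivered intact")
    latencies = [p.received_ms - p.sent_ms for p in delivered]
    on_time = [lat for lat in latencies if lat <= deadline_ms]
    # Measurement window: first send to last arrival, in seconds.
    window_s = (max(p.received_ms for p in delivered) - min(p.sent_ms for p in packets)) / 1000
    return {
        "mean_e2e_ms": sum(latencies) / len(latencies),
        "p95_e2e_ms": percentile(latencies, 95),
        # Share of *sent* packets that arrived intact within the deadline.
        "reliability": len(on_time) / len(packets),
        "user_rate_mbps": sum(p.size_bytes for p in delivered) * 8 / window_s / 1e6,
    }
```

A peak data rate could be derived in the same way by evaluating the rate over short sliding windows and taking the maximum.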

The requirement estimates assumed UHD video in MP4 format streamed over the Transmission Control Protocol (TCP). Optimizations explored included sending single images instead of continuous video streams, performing basic optimizations directly on edge devices, and applying model quantization to improve efficiency.
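Model quantization, one of the optimizations listed above, can be illustrated with a minimal sketch of symmetric post-training int8 quantization of a weight tensor. Storing weights as int8 instead of float32 cuts their size by roughly a factor of four. The per-tensor scheme and the function names here are assumptions. The source does not say which quantization method was used, and production toolchains offer more refined variants.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor post-training quantization to the int8 range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0   # one float scale for the whole tensor
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]
```

The rounding error per weight is at most half the scale. This is why a single large outlier weight, which inflates the scale, hurts accuracy more than a tensor with evenly spread values.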

Cloud Infrastructure and AWS Services

The project relied heavily on cloud infrastructure leveraging AWS services such as Lambda, SageMaker, S3, CloudWatch, and RDS, which played a critical role in providing the necessary resources for training and deploying AI models.

AWS Lambda handled instance management and application serving, while Amazon SageMaker was used to build machine learning pipelines. S3 and RDS stored the datasets, and CloudWatch collected the logs needed to operate the machine learning models and neural networks.

Together, this infrastructure supported the real-time data processing enabled by the 5G network.
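As an illustration of how Lambda and SageMaker can work together, here is a hedged sketch of a Lambda handler. It forwards an uploaded image to a SageMaker inference endpoint through boto3's `sagemaker-runtime` client and its `invoke_endpoint` call. Several details are hypothetical: the endpoint name, the `SEGMENTATION_ENDPOINT` environment variable, the `application/x-image` content type, and the JSON response format. The source does not describe the actual request flow.

```python
import base64
import json
import os

# Hypothetical endpoint name, read from an environment variable set on the function.
ENDPOINT_NAME = os.environ.get("SEGMENTATION_ENDPOINT", "sugar-beet-segmentation")

_runtime = None


def _get_runtime():
    """Create the SageMaker runtime client once per Lambda container."""
    global _runtime
    if _runtime is None:
        import boto3  # bundled with the AWS Lambda Python runtimes
        _runtime = boto3.client("sagemaker-runtime")
    return _runtime


def handle(event: dict, runtime) -> dict:
    """Forward the image in an API Gateway proxy event to the model endpoint."""
    body = event.get("body") or ""
    # API Gateway delivers binary payloads base64-encoded.
    image = base64.b64decode(body) if event.get("isBase64Encoded") else body.encode()
    response = runtime.invoke_endpoint(
        EndpointName=ENDPOINT_NAME,
        ContentType="application/x-image",   # assumed; depends on the model container
        Body=image,
    )
    prediction = json.loads(response["Body"].read())
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(prediction),
    }


def lambda_handler(event, context):
    return handle(event, _get_runtime())
```

Passing the client into `handle` keeps the request logic testable without AWS credentials.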

5G Network Latency

5G networks are designed to achieve ultra-low latency, typically between 1 and 10 milliseconds. In this project, network latency was the time it took data to travel between the mobile devices and the AWS servers over the 5G network. Device-specific processing capabilities also influenced overall latency, for example how quickly a smartphone with a high-performance processor could capture and process photos.

Data upload speeds on the 5G network and the size of the photo impacted data transfer times to AWS. AWS further contributed to latency with processing times for tasks like neural network-based detection and segmentation, which varied based on algorithm complexity and AWS service efficiency. After processing, results were downloaded back to mobile devices, influenced by the download speed of 5G and the size of the result data.
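The latency components described above add up to a simple end-to-end budget. It consists of device time, upload time (photo size divided by uplink rate), network latency in each direction, cloud processing, and download time. The sketch below encodes that sum. All example values are hypothetical, apart from the roughly 320 ms inference time reported later in this article.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    device_ms: float       # capture and on-device processing
    photo_bytes: int       # size of the uploaded photo
    upload_mbps: float     # 5G uplink throughput
    network_ms: float      # one-way network latency (5G target: about 1-10 ms)
    processing_ms: float   # detection/segmentation time on AWS
    result_bytes: int      # size of the returned result
    download_mbps: float   # 5G downlink throughput

    @staticmethod
    def _transfer_ms(size_bytes: int, rate_mbps: float) -> float:
        # bytes -> bits, divided by bits per second, converted to milliseconds
        return size_bytes * 8 / (rate_mbps * 1e6) * 1000

    def upload_ms(self) -> float:
        return self._transfer_ms(self.photo_bytes, self.upload_mbps)

    def download_ms(self) -> float:
        return self._transfer_ms(self.result_bytes, self.download_mbps)

    def total_ms(self) -> float:
        # Network latency is paid once on the way up and once on the way down.
        return (self.device_ms + self.upload_ms() + self.network_ms
                + self.processing_ms + self.network_ms + self.download_ms())
```

For example, `LatencyBudget(50, 2_000_000, 100, 10, 320, 100_000, 500).total_ms()` gives about 551.6 ms. In this hypothetical case, uploading a 2 MB photo at 100 Mbit/s takes 160 ms, far more than the 10 ms radio latency in each direction.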

Plant Recognition Using AI

In plant recognition, the AI-driven process started with building a comprehensive database of plant images to train neural network-based algorithms. These algorithms were trained to distinguish sugar beets from other plants by recognizing features specific to each plant type, such as leaf shape and flower color.

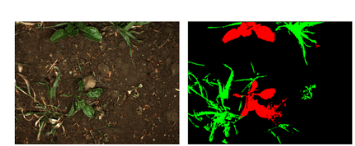

Here, plant recognition covers two tasks: weed detection and sugar beet segmentation.

- Weed Detection

For weed detection, the project used MobileNet-v3, trained with extensive data augmentation and weighted sampling. The model achieved an accuracy of 0.984 and an AUC of 0.998.

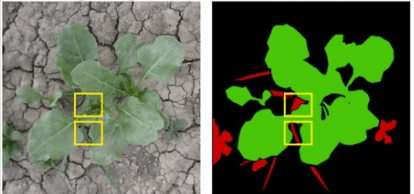
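Weighted sampling is commonly used to counter class imbalance, for example when weeds are much rarer than sugar beets in the training images. The sketch below assumes that is what it meant here, since the source does not specify the weighting. It is a minimal inverse-frequency sampler. PyTorch's `torch.utils.data.WeightedRandomSampler` accepts per-sample weights of the same kind.

```python
import random
from collections import Counter
from typing import List, Sequence


def inverse_frequency_weights(labels: Sequence[str]) -> List[float]:
    """Per-sample weights so that each class carries the same total weight."""
    counts = Counter(labels)
    return [1.0 / counts[label] for label in labels]


def sample_batch(samples: Sequence, labels: Sequence[str], batch_size: int,
                 rng: random.Random) -> list:
    """Draw a class-balanced batch with replacement."""
    weights = inverse_frequency_weights(labels)
    return rng.choices(samples, weights=weights, k=batch_size)
```

With 90 beet images and 10 weed images, each class is drawn about half the time, so the model sees weeds far more often than their raw share would suggest.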

- Sugar Beet Segmentation

For segmentation, models such as YOLACT, ResNeSt, SOLO, and U-Net were evaluated for their ability to precisely delineate individual sugar beet plants within images. The most efficient model was then selected based on criteria such as inference speed. The segmentation data came from drone-captured RGB images, which were resized and annotated for training and validation.

Segmentation involved creating masks that accurately delineated plant boundaries. To reduce manual annotation effort, labeling was prioritized for the most challenging samples, which significantly improved the model’s performance. Iterative retraining combined with uncertainty sampling proved effective, achieving segmentation accuracy above 98% across various growth stages.
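Uncertainty sampling ranks unlabeled images by how unsure the model is about them and sends the most uncertain ones to annotators. The sketch below scores each predicted foreground-probability mask by its mean per-pixel binary entropy. The entropy criterion and the data layout are assumptions, since the source does not state which uncertainty measure was used.

```python
import math
from typing import List, Sequence, Tuple


def binary_entropy(p: float, eps: float = 1e-12) -> float:
    """Entropy (in nats) of a single foreground probability."""
    p = min(max(p, eps), 1 - eps)   # avoid log(0)
    return -(p * math.log(p) + (1 - p) * math.log(1 - p))


def mask_uncertainty(prob_mask: List[List[float]]) -> float:
    """Mean per-pixel entropy of a predicted foreground-probability mask."""
    values = [binary_entropy(p) for row in prob_mask for p in row]
    return sum(values) / len(values)


def select_for_labeling(predictions: Sequence[Tuple[str, List[List[float]]]], k: int) -> List[str]:
    """Return the ids of the k images whose masks the model is least sure about."""
    ranked = sorted(predictions, key=lambda item: mask_uncertainty(item[1]), reverse=True)
    return [image_id for image_id, _ in ranked[:k]]
```

Pixels with probabilities near 0.5 dominate the score, so images with ambiguous plant boundaries, such as overlapping leaves, rise to the top of the labeling queue.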

- Model Evaluation

The model was trained with rigorous data augmentation and evaluated with several metrics, including Intersection over Union (IoU). In an inference analysis on a subset of the ‘plant seedlings v2’ dataset, the model reached an accuracy of 81%. Inference took approximately 320 milliseconds after a 7-second initialization period that is needed only once per session.
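IoU compares a predicted mask with its ground-truth mask. It is the number of pixels covered by both masks divided by the number covered by either. A minimal sketch for binary masks follows. Storing masks as nested lists is an assumption made to keep the example dependency-free.

```python
from typing import List


def mask_iou(pred: List[List[int]], target: List[List[int]]) -> float:
    """Intersection over Union of two equally sized binary masks."""
    intersection = union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            intersection += int(bool(p) and bool(t))   # pixel in both masks
            union += int(bool(p) or bool(t))           # pixel in either mask
    # Two empty masks agree perfectly by convention.
    return intersection / union if union else 1.0
```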

Plant Monitoring Using AI

In AI-powered plant monitoring, cameras and sensors captured plant data that machine learning and AI algorithms then analyzed. This analysis was key to assessing plant health and pinpointing stress, diseases, or other factors affecting growth.

Such applications extend beyond optimizing agricultural productivity to monitoring natural ecosystems such as forests, supporting conservation efforts, and improving our understanding of environmental impacts.

Object Detection in Plant Monitoring

After the sugar beet plants were segmented, the next phase was object detection, aimed at understanding each plant’s health, growth, and other characteristics. For this, advanced models such as YOLOv4, MobileNetV2, and VGG-19 with attention mechanisms were deployed. These models analyzed segmented images of sugar beets to detect specific areas of stress and disease, enabling precise, targeted interventions.

The project achieved significant milestones in disease detection by fine-tuning ResNet-18 and ResNet-34 models pre-trained on ImageNet. These models reached an accuracy of 0.88 in identifying diseases affecting sugar beet plants, with an Area Under the ROC Curve (AUC) of 0.898. They also distinguished reliably between diseased and healthy plants with high prediction confidence.
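The AUC reported above equals the probability that a randomly chosen diseased sample receives a higher score than a randomly chosen healthy one. A small pairwise sketch of that definition follows. It is O(n²) and meant for illustration. For real evaluations, `sklearn.metrics.roc_auc_score` computes the same value efficiently.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as P(score of a random positive > score of a random negative); ties count 0.5."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in positives:
        for n in negatives:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(positives) * len(negatives))
```

Unlike accuracy, AUC does not depend on a decision threshold. This is why the two metrics are reported separately.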

The project employed a systematic approach to disease detection, segmenting images into standardized patches. These patches underwent meticulous annotation using interactive tools to pinpoint areas affected by diseases. Object detection further enhanced accuracy by outlining bounding boxes around plants, facilitating precise monitoring of plant health.
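Splitting images into standardized patches can be sketched as computing a grid of equally sized tiles. The 512-pixel patch size is hypothetical. Shifting the last row and column inward, so edge tiles overlap slightly instead of being padded, is one design choice among several.

```python
from typing import List, Tuple


def patch_grid(width: int, height: int, patch: int = 512) -> List[Tuple[int, int]]:
    """Top-left (x, y) corners of equally sized patches covering the whole image."""
    def starts(length: int) -> List[int]:
        if length <= patch:
            return [0]   # images smaller than a patch would need padding instead
        positions = list(range(0, length - patch, patch))
        positions.append(length - patch)   # last patch flush with the image edge
        return positions

    return [(x, y) for y in starts(height) for x in starts(width)]
```

For a 3840×2160 frame, `patch_grid(3840, 2160)` yields 8 columns × 5 rows = 40 patches, all lying fully inside the image.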

Plant Production Prediction

In plant production prediction, AI models used environmental data such as weather conditions and soil parameters to forecast crop yields. Linear Regression and Ridge Regression were employed for this, alongside Isolation Forest, which is an unsupervised anomaly-detection algorithm rather than a regression model.

These models integrated numeric features extracted from bounding box regions along with soil data to optimize fertilizer application.
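To illustrate the regression step, here is a dependency-free sketch of ridge regression. It solves the regularized normal equations (XᵀX + αI)w = Xᵀy on centered data, so the intercept is not penalized. The example features are hypothetical, as is the regularization strength. The source does not list the exact inputs.

```python
from typing import List, Tuple


def _solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a small dense system."""
    d = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(d):
        pivot = max(range(col, d), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, d):
            factor = M[r][col] / M[col][col]
            for c in range(col, d + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * d
    for i in reversed(range(d)):
        w[i] = (M[i][d] - sum(M[i][j] * w[j] for j in range(i + 1, d))) / M[i][i]
    return w


def ridge_fit(X: List[List[float]], y: List[float], alpha: float = 1.0) -> Tuple[List[float], float]:
    """Return (coefficients, intercept) of a ridge regression fit."""
    n, d = len(X), len(X[0])
    x_mean = [sum(row[j] for row in X) / n for j in range(d)]
    y_mean = sum(y) / n
    Xc = [[row[j] - x_mean[j] for j in range(d)] for row in X]   # centered features
    yc = [v - y_mean for v in y]
    A = [[sum(r[i] * r[j] for r in Xc) + (alpha if i == j else 0.0) for j in range(d)]
         for i in range(d)]
    b = [sum(r[i] * t for r, t in zip(Xc, yc)) for i in range(d)]
    w = _solve(A, b)
    intercept = y_mean - sum(m * wj for m, wj in zip(x_mean, w))
    return w, intercept


def ridge_predict(w: List[float], intercept: float, x: List[float]) -> float:
    return intercept + sum(wj * xj for wj, xj in zip(w, x))
```

For instance, each row of `X` might hold a plant's bounding-box area and a soil nitrogen measurement, with `y` the observed yield. With `alpha=0` the fit reduces to ordinary least squares. Larger `alpha` shrinks the coefficients, which stabilizes them when features are correlated.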

Model Deployment Considerations

Deployment strategies for the developed models were evaluated for both edge devices and cloud platforms. Deploying models on edge devices offered advantages such as reduced costs and lower latency.

However, hardware constraints on edge devices could limit accuracy. Cloud deployment, on the other hand, offered faster inference on high-performance GPUs but could incur additional costs. It also depended on internet connectivity, which could add communication latency.

Comparative Analysis with 5G Network

A comparative analysis showed that using a 5G network significantly sped up the sugar beet segmentation workflow compared with conventional 4G and Wi-Fi setups, as evidenced by reduced average setup and network times.

- Data Preparation Process

The data preparation process involved collecting datasets of healthy and diseased plants, gathering data for weed detection and growth-stage identification, and extracting images from raw 4K video. Techniques such as histogram equalization, image filtering, and HSV color space transformation were used to prepare the data for analysis.
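Histogram equalization spreads the intensity values of an image over the full range by mapping each level through the normalized cumulative histogram. A minimal sketch for a flat list of 8-bit grayscale values follows. In practice one would apply it to the luminance or V channel of a color frame, or use OpenCV's `cv2.equalizeHist`.

```python
from typing import List


def equalize_histogram(pixels: List[int], levels: int = 256) -> List[int]:
    """Histogram equalization for a flat list of 8-bit grayscale values."""
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1
    cdf, running = [], 0
    for count in hist:          # cumulative pixel count up to each level
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    total = len(pixels)
    if total == cdf_min:        # constant image: nothing to stretch
        return list(pixels)
    lut = [round((c - cdf_min) / (total - cdf_min) * (levels - 1)) for c in cdf]
    return [lut[v] for v in pixels]
```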

Samples of healthy sugar beet leaves were collected along with diseased samples, such as corn leaves with Grey Leaf Spot. To extract disease features, the leaf was separated from the background, and the images were then resized, transformed, and merged to create realistic samples for analysis.
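The merging step can be sketched as alpha compositing, in which a lesion patch cut from a diseased leaf is blended onto a healthy leaf using a soft mask. The image layout (nested lists of RGB tuples) and the function name are assumptions for illustration. The source does not detail how images were merged.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def composite(leaf: List[List[RGB]], lesion: List[List[RGB]],
              mask: List[List[float]], top: int, left: int) -> List[List[RGB]]:
    """Blend `lesion` onto a copy of `leaf` at (top, left); mask holds alpha values in [0, 1]."""
    out = [row[:] for row in leaf]   # leave the original image untouched
    for i, (lesion_row, mask_row) in enumerate(zip(lesion, mask)):
        for j, (src, alpha) in enumerate(zip(lesion_row, mask_row)):
            y, x = top + i, left + j
            if 0 <= y < len(out) and 0 <= x < len(out[0]):   # skip pixels outside the leaf image
                dst = out[y][x]
                out[y][x] = tuple(round(alpha * s + (1 - alpha) * d) for s, d in zip(src, dst))
    return out
```

A mask that fades from 1 at the lesion center to 0 at its border avoids hard seams, which helps the synthetic samples look realistic.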

- Active Learning Loop

An active learning loop started from unlabeled data, which was used to train detection models. These models generated annotation queries that human annotators answered. Iterative cycles of training and annotation then continually refined the models’ accuracy.
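The loop described above can be sketched on toy data, with every element of the example hypothetical. A nearest-centroid classifier on one-dimensional features stands in for the detection models, and margin sampling picks the queries. A small labeled seed set is assumed so that the first model can be trained, and an `oracle` function plays the role of the human annotators.

```python
from typing import Callable, Dict, List, Tuple


def train_centroids(labeled: List[Tuple[float, str]]) -> Dict[str, float]:
    """Fit a nearest-centroid classifier: one mean feature value per class."""
    sums: Dict[str, float] = {}
    counts: Dict[str, int] = {}
    for x, y in labeled:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict(model: Dict[str, float], x: float) -> str:
    return min(model, key=lambda y: abs(x - model[y]))


def margin(model: Dict[str, float], x: float) -> float:
    """Gap between the two closest centroids (needs two classes); small gap = uncertain."""
    d = sorted(abs(x - c) for c in model.values())
    return d[1] - d[0]


def active_learning(labeled, pool: List[float], oracle: Callable[[float], str],
                    rounds: int = 3, queries_per_round: int = 2):
    labeled, pool = list(labeled), list(pool)
    for _ in range(rounds):
        if not pool:
            break
        model = train_centroids(labeled)
        # Query the samples the current model is least sure about ...
        pool.sort(key=lambda x: margin(model, x))
        queries, pool = pool[:queries_per_round], pool[queries_per_round:]
        # ... and let the (simulated) annotator label them before retraining.
        labeled += [(x, oracle(x)) for x in queries]
    return train_centroids(labeled), labeled
```

Because the first queries land near the decision boundary, each annotation moves the model more than a randomly chosen label would.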

- Data Annotation via Multimodal Foundation Model

To address the challenge of limited labeled data, the project used powerful foundation models to generate ground truth annotations. CLIP, a transformer-based model developed by OpenAI and trained on over 400 million image-text pairs, played a pivotal role.

Using a Vision Transformer (ViT) backbone, CLIP achieved 95% accuracy on the validation sets, categorizing images into classes such as sugar beet and weed.
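CLIP classifies images zero-shot by comparing an image embedding with text embeddings of prompts such as “a photo of a weed”. The sketch below shows only that scoring step, using hypothetical toy vectors. Real embeddings would come from CLIP's image and text encoders. The default scale of 100 is an assumption modeled on CLIP's learned logit scale.

```python
import math
from typing import Dict, List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def zero_shot_classify(image_embedding: List[float],
                       text_embeddings: Dict[str, List[float]],
                       scale: float = 100.0) -> Dict[str, float]:
    """Class probabilities as a softmax over scaled image-text cosine similarities."""
    names = list(text_embeddings)
    logits = [scale * cosine(image_embedding, text_embeddings[n]) for n in names]
    m = max(logits)                       # subtract the max for numerical stability
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return {n: e / total for n, e in zip(names, exps)}
```

When such predictions are used as ground truth annotations, a probability threshold can route low-confidence images to human review instead.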

- Drone Technology for Data Collection

One of the critical technologies employed in the project was the use of drones equipped with RGB cameras that captured 4K video. These drones provided detailed images (3840×2160 resolution) for analysis.

Pre-processing these images significantly boosted model accuracy, with notable improvements observed in models like VGGNet (+38.52%), ResNet50 (+21.14%), DenseNet121 (+7.53%), and MobileNet (+6.6%).

Techniques such as histogram equalization were used to enhance image contrast, while transformation into HSV color space helped emphasize plant areas and highlight relevant features.
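Emphasizing plant areas in HSV space can be as simple as thresholding hue, saturation, and value. The sketch below uses Python's standard `colorsys.rgb_to_hsv`. The green hue band and the thresholds are illustrative assumptions that would need tuning for each camera and field.

```python
import colorsys
from typing import List, Tuple


def plant_mask(pixels: List[List[Tuple[int, int, int]]], hue_range=(60, 180),
               min_saturation: float = 0.25, min_value: float = 0.2) -> List[List[int]]:
    """1 where an 8-bit RGB pixel falls in a 'green vegetation' HSV band, else 0."""
    lo, hi = hue_range   # degrees
    mask = []
    for row in pixels:
        mask_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)   # h, s, v in [0, 1]
            mask_row.append(int(lo <= h * 360 <= hi and s >= min_saturation and v >= min_value))
        mask.append(mask_row)
    return mask
```

Separating hue from brightness is what makes this more robust than thresholding raw RGB values. Shadowed leaves keep their hue even though their value drops.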

- Synthetic Data Generation

To compensate for limited image data, synthetic datasets were generated using machine learning techniques. Real image data was collected with drones carrying RGB cameras, flying at heights of 1 m to 4 m and at speeds of 2 m/s or more.

Other vehicles, such as tractors, were also employed for data collection. This synthetic data generation proved particularly beneficial for detecting sugar beet diseases.
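The flight parameters above determine how much consecutive video frames overlap and how finely each pixel samples the ground. A rough pinhole-camera sketch follows. It assumes a 70° horizontal field of view, a 30 fps frame rate, a nadir-pointing camera, and flight along the short image axis. Only the 3840-pixel frame width comes from this article.

```python
import math


def flight_coverage(height_m: float, speed_mps: float, fov_deg: float = 70.0,
                    fps: float = 30.0, image_width_px: int = 3840,
                    aspect: tuple = (16, 9)) -> dict:
    """Ground footprint, frame overlap, and ground sampling distance for a nadir camera."""
    across_m = 2 * height_m * math.tan(math.radians(fov_deg) / 2)  # footprint width
    along_m = across_m * aspect[1] / aspect[0]   # footprint length in flight direction
    advance_m = speed_mps / fps                  # distance flown between two frames
    return {
        "footprint_m": (across_m, along_m),
        "overlap": max(0.0, 1 - advance_m / along_m),
        "gsd_cm": across_m / image_width_px * 100,   # ground size of one pixel
    }
```

At 2 m height, a 90° field of view, and 2 m/s, consecutive frames overlap by about 97%. The 4K video therefore contains many near-duplicate frames that can be subsampled when images are extracted.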

Conclusion

The “5G Networks as an Enabler for Real-time Learning in Sustainable Farming” project successfully demonstrated how 5G technology can enhance the ecological, economic, and sustainable aspects of sugar beet cultivation. Through collaboration with HSHL and Pfeifer & Langen, the project integrated real-time data collection and AI-driven analysis, improving efficiency and reducing unnecessary field visits.

A dedicated 5G campus network enabled the precise application of fertilizers and crop protection products. GeoPard Agriculture played a crucial role in developing the plant detection and monitoring scenarios and in creating a prototype machine learning system for the 5G agricultural environment. The project’s success underscores the importance of advanced technologies in sustainable farming and highlights 5G’s potential to drive innovation and efficiency.
